Using Databricks SQL, preparing time series data for deep learning training can be straightforward.

Data Overview

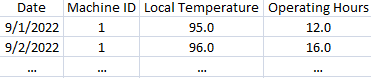

To demonstrate this, we’ll use a simple fictitious dataset. Our goal is to prepare this data for deep learning time series forecasting or classification models and use multiple predictive features. The input data looks as follows:

For a given machine ID, we may want to predict the operating hours in the next day, failure rate, etc.

Databricks SQL Functions

Time series data used in deep learning models such as LSTMs often are required to be in the shape (batch, timestep, feature).

This requires some additional data prep as the desired shape isn’t a simple 2d tabular setup. In the case of our example, for each Machine ID we want to organize a number of features together for each day.

The critical functions in Databricks SQL will be struct, collect_list, and standard partitioning/window functions.

Databricks SQL Setup

Assume our example data is loaded into a table already. Preparing our desired shape with a given number of lookback steps becomes simple:

SELECT

*

,collect_list(struct(

local_temperature,

operating_hours

)) OVER (PARTITION BY machine_id

ORDER BY cal_date

ROWS BETWEEN 90 PRECEDING and CURRENT ROW) as ts_data

FROM delta_table_historicalThis will collect all our historical data for the past 90 days and current into one new column with the data partitioned and ordered correctly. We can store it in a variable spark_df (spark_df = spark.sql(…)) for later use. Note - no gaps should be present in the data and enough history must be available per partition.

Numpy conversion

Once our data is prepared per training instance, we can convert it to a numpy array easily to prepare for deep learning training. This can be accomplished as follows:

import numpy as np

ts_np = np.squeeze(np.array(spark_df.select('ts_data').collect()), axis=1).astype('float32')At this point our data reshaping is complete and can be utilized in a deep learning model!